If you have a random sample drawn from a continuous uniform(a, b) distribution stored in an array x, the maximum likelihood estimate (MLE) for a is min(x) and the MLE for b is max(x).

Prove it to yourself

You can take a look at this Math StackExchange answer if you want to see the calculus, but you can prove it to yourself with a computer.

If you set a = 0.5 and b = 1.5, you can draw a sample with NumPy. You should compute the MLE directly for different sample sizes:

import numpy as np

a = 0.5

b = 1.5

n = 10000

for n in [10, 100, 1000, 10000]:

x = np.random.uniform(a, b, size=n)

print(x.min(), x.max())

# Expect something like...

# 0.5590952009638621 1.4469720006899172

# 0.5029192798674605 1.4866578874813645

# 0.5002904764114741 1.4994683757767997

# 0.5000709162887501 1.4999759888186115Notice, as the sample size gets bigger, the estimates get closer to their true values.

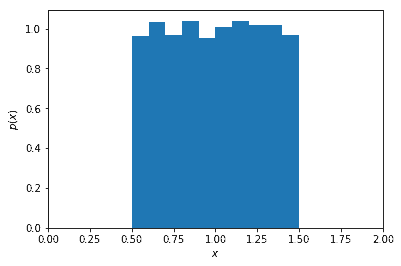

You can also see it visually by plotting a histogram of the random values with Matplotlib:

import matplotlib.pyplot as plt

plt.hist(x, density=True)

plt.xlim([0, 2])

plt.xlabel("$x$")

plt.ylabel("$p(x)$");

Finally, although it’s probably overkill in this case, you can maximize the likelihood numerically to see what the MLE is. Simply implement the log likelihood function. The log likelihood tends to be more stable than the likelihood function and the extrema will be the same. SciPy comes with several numerical optimization routines. They all minimize functions so you will need to minimize the negative log likelihood:

from scipy.optimize import differential_evolution

def log_likelihood(theta_hat):

a_hat, b_hat = theta_hat

return -n * np.log(b_hat - a_hat)

def negative_log_likelihood(theta_hat):

return -log_likelihood(theta_hat)

bounds = [[-1e10, x.min()], [x.max(), 1e10]]

differential_evolution(negative_log_likelihood, bounds=bounds)

# Expect something like...

# fun: -0.9537197940327512

# message: 'Optimization terminated successfully.'

# nfev: 4233

# nit: 140

# success: True

# x: array([0.50007057, 1.4999752 ])Remember that a is constrained to be between negative infinity and the minimum of the sample, while b is constrained to be between the maximum of the sample and infinity. I used 1e10 as a stand-in for infinity because the differential_evolution() function complained when I passed in np.inf. The result from this approach won’t be as good an estimate as computing it directly, but it will still improve as the sample size increases.

Discrete Uniform

What about the discrete uniform? In this case, the support only covers even integers, but the MLE is the same. You can tell it’s the same because the probability mass function has the same functional form as the probability density function in the continuous case.

Make sure to check out my gist on GitHub if you want to play around with this distribution.